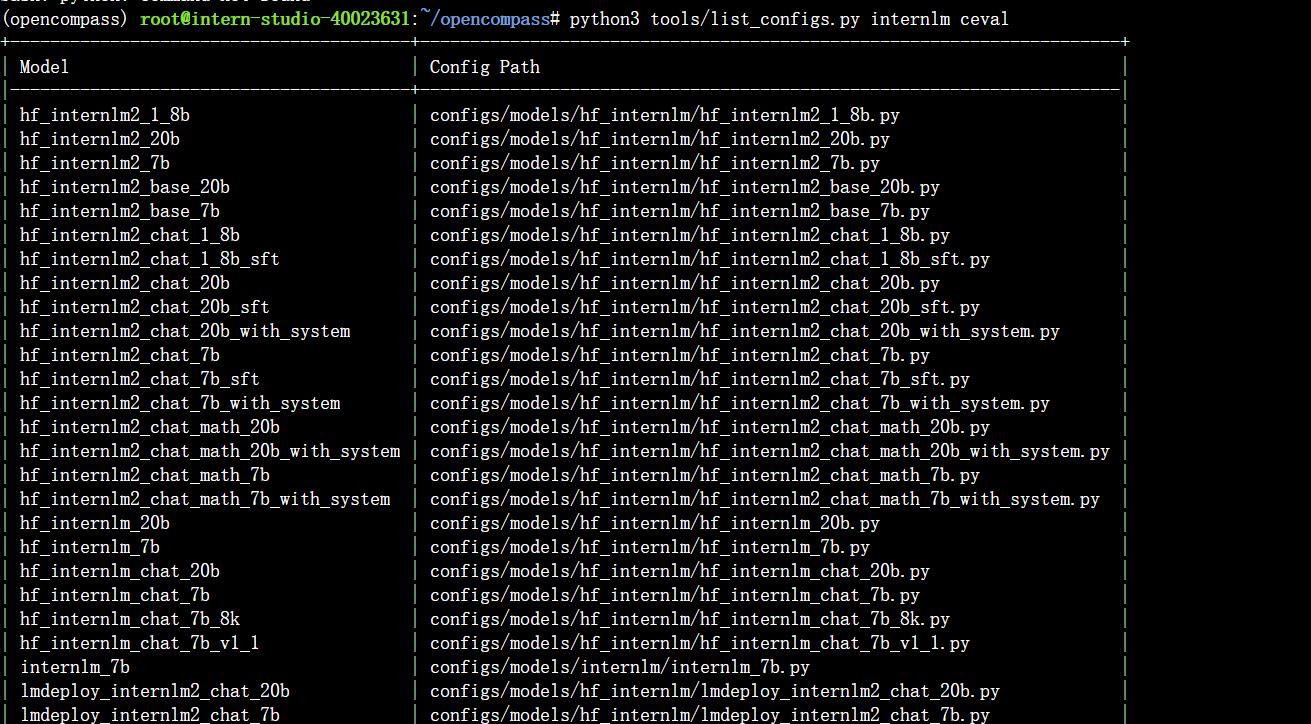

Evaluation configuration

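An evaluation involves two things:

- the dataset

- the model

For the model, you can also choose how it is invoked: loading it directly, or calling it through an API.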

Preparing the dataset

```bash
cp /share/temp/datasets/OpenCompassData-core-20231110.zip /root/opencompass/
cd /root/opencompass/
unzip OpenCompassData-core-20231110.zip
```
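If `unzip` is not available on the machine, the same step can be done from Python with the standard-library `zipfile` module. This is a minimal sketch. The helper name `extract_dataset` is hypothetical, and the assumption that the archive unpacks into a top-level `data/` folder is mine, not something the tutorial states.

```python
import zipfile
from pathlib import Path


def extract_dataset(archive: str, dest: str) -> list[str]:
    """Extract `archive` into `dest` and return the top-level entries it contains.

    Hypothetical helper; assumes a trusted archive (no path sanitising).
    """
    dest_path = Path(dest)
    dest_path.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest_path)
        # First path component of every member, e.g. "data" for "data/ceval/..."
        top_level = {name.split("/", 1)[0] for name in zf.namelist()}
    return sorted(top_level)
```

For example, `extract_dataset("/root/opencompass/OpenCompassData-core-20231110.zip", "/root/opencompass/")` extracts the archive next to the source tree and returns the folder names it created. If the layout assumption holds, that list is `["data"]`.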

Installing OpenCompass

Installing from source by following the tutorial works as described.

Here I cover installing from pip instead:

```bash
pip install opencompass==0.2.3
```
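To confirm which version pip actually installed, you can query the package metadata. The API patch described further down is specific to 0.2.3, so this matters. Below is a small sketch using `importlib.metadata`; the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None
```

After the install above, `installed_version("opencompass")` should report `0.2.3`.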

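The source tree ships the config files and evaluation configs, but the pip package does not include them. You therefore have to copy the corresponding configs and data into place yourself.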
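Below is a minimal sketch of that copy step. It assumes you have a source checkout of OpenCompass at `src_root` (for example from `git clone`) and want its `configs/` (and optionally `data/`) directories under `dst_root`, where you will run the evaluation. `copy_configs` and both paths are hypothetical; adjust them to your own layout.

```python
import shutil
from pathlib import Path


def copy_configs(src_root: str, dst_root: str, subdirs=("configs",)) -> list[Path]:
    """Copy the given subdirectories of a source checkout into dst_root.

    Existing files at the destination are overwritten (dirs_exist_ok=True).
    Returns the destination directories that were written.
    """
    written = []
    for sub in subdirs:
        src = Path(src_root) / sub
        if not src.is_dir():
            raise FileNotFoundError(f"missing {src}")
        dst = Path(dst_root) / sub
        shutil.copytree(src, dst, dirs_exist_ok=True)
        written.append(dst)
    return written
```

For example, `copy_configs("/root/opencompass-src", "/root/workdir", subdirs=("configs", "data"))` copies both folders in one call.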

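After installing and copying the configs as described, you get output like the following.

Open the config file for the HF model, configs/models/hf_internlm/hf_internlm2_chat_1_8b.py, and replace the model paths: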

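```python
# Inside the model dict: comment out the Hugging Face hub paths...
# path="internlm/internlm2-chat-1_8b",  # Hugging Face hub path, requires a download
# tokenizer_path='internlm/internlm2-chat-1_8b',  # Hugging Face hub path, requires a download
# ...and point to the local copy instead
path="/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-1_8b",
tokenizer_path='/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-1_8b',
```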
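Before starting a long run, it can save time to check that the local path really holds a Hugging Face-style model directory. This is a sketch with two assumptions. The expected file names (`config.json`, `tokenizer_config.json`) reflect the usual HF layout, not something the tutorial states. `check_model_dir` is a hypothetical helper.

```python
from pathlib import Path

# Files a typical Hugging Face model directory contains (assumption).
EXPECTED_FILES = ("config.json", "tokenizer_config.json")


def check_model_dir(path: str, expected=EXPECTED_FILES) -> list[str]:
    """Return the expected files missing from `path`; an empty list means all were found."""
    root = Path(path)
    if not root.is_dir():
        return list(expected)
    return [name for name in expected if not (root / name).is_file()]
```

Run `check_model_dir("/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-1_8b")`. An empty result means both files were found.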

Evaluating the model

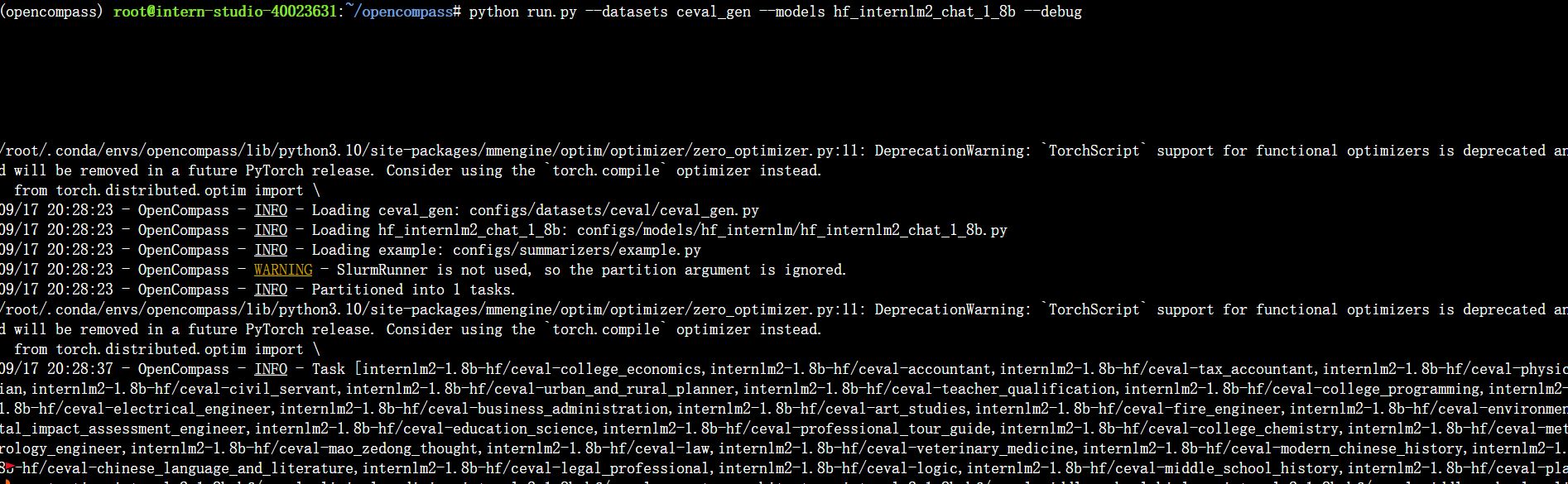

```bash
python run.py --datasets ceval_gen --models hf_internlm2_chat_1_8b --debug
```

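For this step, I recommend raising the dev machine's GPU allocation to 30% and increasing the batch size. Otherwise the run is extremely slow and can easily take an hour or two.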
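In the OpenCompass model configs, the batch size is typically a `batch_size=` field of the model dict, for example in `hf_internlm2_chat_1_8b.py`. If you would rather change it with a script than by hand, below is a hypothetical helper that rewrites every `batch_size=<integer>` keyword in a config file's text. It assumes the value is a plain integer literal, so check the file afterwards.

```python
import re
from pathlib import Path

# Matches "batch_size = 8" but not e.g. "max_batch_size=8" (\b needs a word boundary).
_BATCH_RE = re.compile(r"(\bbatch_size\s*=\s*)\d+")


def set_batch_size(text: str, new_size: int) -> tuple[str, int]:
    """Replace every integer batch_size= value; return (new_text, replacements)."""
    return _BATCH_RE.subn(lambda m: f"{m.group(1)}{new_size}", text)


def update_config_file(path: str, new_size: int) -> int:
    """Rewrite the file in place and return how many values were changed."""
    p = Path(path)
    new_text, count = set_batch_size(p.read_text(encoding="utf-8"), new_size)
    p.write_text(new_text, encoding="utf-8")
    return count
```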

See Evaluation results for the final results.
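By default, OpenCompass writes each run into a timestamped subfolder of the work directory, with the summary tables under `summary/`. As far as I know, the work directory is `outputs/default/` unless `--work-dir` or a `work_dir` entry in the config overrides it. Assuming that layout, this sketch finds and reads the newest summary CSV. `latest_summary` and `read_summary` are hypothetical helpers, and no particular column names are assumed.

```python
import csv
from pathlib import Path
from typing import List, Optional


def latest_summary(work_dir: str) -> Optional[Path]:
    """Return the most recently modified summary_*.csv under work_dir/*/summary/."""
    candidates = list(Path(work_dir).glob("*/summary/summary_*.csv"))
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)


def read_summary(path: Path) -> List[dict]:
    """Read the summary CSV into a list of row dicts keyed by column header."""
    with path.open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))
```

For example, `latest_summary("outputs/default")` returns the path of the newest summary, which `read_summary` turns into rows.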

Evaluating through an API

Preparing the API

First, install LMDeploy.

Then start a server in LMDeploy's API mode:

```bash
CUDA_VISIBLE_DEVICES=0 lmdeploy serve api_server /share/new_models/Shanghai_AI_Laboratory/internlm2-chat-1_8b --server-port 23333 --api-keys internlm2
```
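`lmdeploy serve api_server` exposes an OpenAI-compatible API. A quick readiness check is therefore to request `GET /v1/models` with the key sent as a Bearer token. Below is a standard-library sketch. `build_models_request` and `wait_for_server` are hypothetical helpers, and the default timeout and polling interval are arbitrary choices.

```python
import json
import time
import urllib.error
import urllib.request


def build_models_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the OpenAI-compatible model list endpoint."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )


def wait_for_server(base_url: str, api_key: str, timeout: float = 120.0,
                    interval: float = 2.0) -> list[str]:
    """Poll /v1/models until the server answers; return the served model ids."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            req = build_models_request(base_url, api_key)
            with urllib.request.urlopen(req, timeout=interval) as resp:
                payload = json.load(resp)
            return [model["id"] for model in payload.get("data", [])]
        except urllib.error.HTTPError:
            raise  # server is up but rejected the request (e.g. wrong API key)
        except OSError:
            # Connection refused or timed out: the server is probably still starting.
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{base_url} not ready after {timeout} s")
            time.sleep(interval)
```

For example, `wait_for_server("http://0.0.0.0:23333", "internlm2")` blocks until the model list is served.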

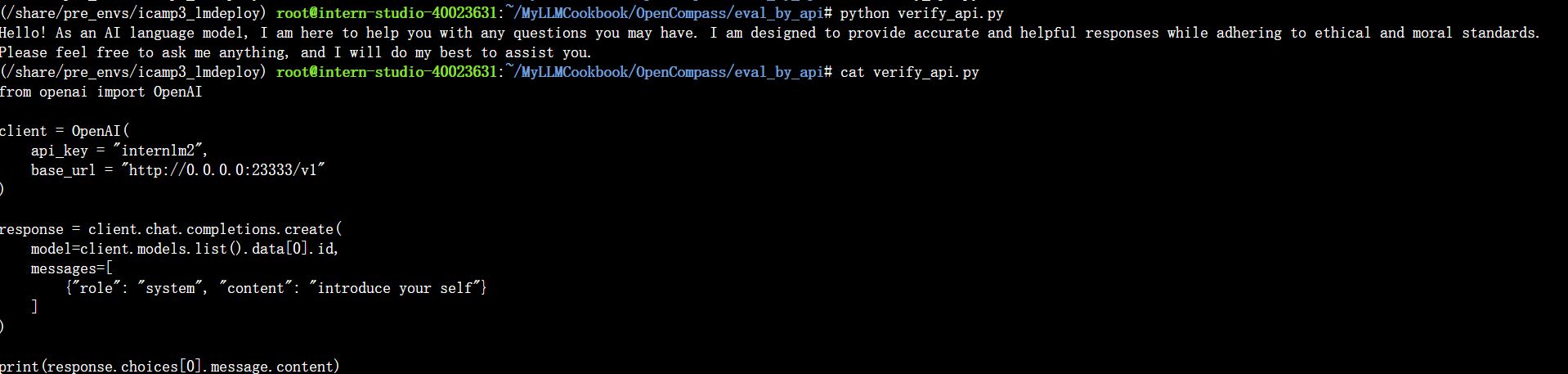

Once the server is up, run the following script to verify it:

```python
from openai import OpenAI

# api_key must match a key passed to --api-keys when starting the server
client = OpenAI(
    api_key="internlm2",
    base_url="http://0.0.0.0:23333/v1",
)

response = client.chat.completions.create(
    # Use the first model the server reports
    model=client.models.list().data[0].id,
    messages=[
        {"role": "system", "content": "introduce yourself"},
    ],
)

print(response.choices[0].message.content)
```
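The OpenAI client is a thin wrapper over HTTP. When debugging (for instance, a 401 that suggests the key is not being sent), it can help to see the raw request. That request is a `POST` to `/chat/completions` under the same `base_url`, with a Bearer token and a JSON body holding `model` and `messages`. Below is a standard-library sketch. `build_chat_request` and `chat` are hypothetical helpers; the model id should be whatever `/v1/models` reports, and a `user` message is used here instead of `system`.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(base_url: str, api_key: str, model: str, prompt: str) -> str:
    """Send the request and return the first choice's message content."""
    req = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Usage mirrors the client script: `chat("http://0.0.0.0:23333/v1", "internlm2", model_id, "introduce yourself")`.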

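Verification result

Changing the OpenCompass configuration

Change the configuration as follows. The `..` relative imports inside `read_base()` are resolved against the config file's own location. The file should therefore sit one directory below the configs root, as the example configs in `configs/api_examples/` do.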

```python
from mmengine.config import read_base
from opencompass.models.turbomind_api import TurboMindAPIModel
from opencompass.partitioners import NaivePartitioner
from opencompass.runners.local_api import LocalAPIRunner
from opencompass.tasks import OpenICLInferTask

api_meta_template = dict(
    round=[
        dict(role='HUMAN', api_role='HUMAN'),
        dict(role='BOT', api_role='BOT', generate=True),
    ],
    reserved_roles=[dict(role='SYSTEM', api_role='SYSTEM')],
)

with read_base():
    from ..summarizers.medium import summarizer
    from ..datasets.ceval.ceval_gen import ceval_datasets

datasets = [
    *ceval_datasets,
]

models = [
    dict(
        abbr='internlm2',
        type=TurboMindAPIModel,
        api_key='internlm2',                # API key
        api_addr='http://localhost:23333',  # Service address
        rpm_verbose=True,                   # Whether to print request rate
        meta_template=api_meta_template,    # Service request template
        query_per_second=5,                 # Service request rate
        max_out_len=1024,                   # Maximum output length
        max_seq_len=4096,                   # Maximum input length
        run_cfg=dict(num_gpus=1, num_procs=1),
        end_str='<eoa>',
        temperature=0.01,                   # Generation temperature
        batch_size=8,                       # Batch size
        retry=3,                            # Number of retries
    )
]

work_dir = "outputs/api_internlm2/"
```
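The `query_per_second=5` setting caps how fast requests are sent to the server, and `retry=3` re-sends a failed request. The snippet below only illustrates those two ideas and is not OpenCompass's implementation. `RateLimiter` and `call_with_retry` are hypothetical names. The sketch treats `retry` as the number of extra attempts after the first one, which is an assumption about the real semantics.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimiter:
    """Allow at most `qps` calls per second by spacing calls 1/qps seconds apart."""

    def __init__(self, qps: float, clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.interval = 1.0 / qps
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = clock()

    def acquire(self) -> None:
        """Block until the next call is allowed, then reserve the following slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval


def call_with_retry(fn: Callable[[], T], retry: int = 3,
                    limiter: Optional[RateLimiter] = None) -> T:
    """Call fn, re-trying up to `retry` extra times if it raises."""
    last_exc: Optional[Exception] = None
    for _ in range(retry + 1):
        if limiter is not None:
            limiter.acquire()
        try:
            return fn()
        except Exception as exc:  # a real client would catch narrower errors
            last_exc = exc
    raise RuntimeError(f"failed after {retry + 1} attempts") from last_exc
```

With `qps=5`, consecutive calls are spaced at least 0.2 s apart. That smooths the load on a single LMDeploy server instead of sending bursts.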

Checking the OpenCompass version

In version 0.2.3, TurboMindAPIModel does not pass the API key on to the client, so you have to patch its implementation by hand:

```python
# In opencompass/opencompass/models/turbomind_api.py (excerpt of TurboMindAPIModel)
def __init__(self,
             api_addr: str = 'http://0.0.0.0:23333',
             max_seq_len: int = 2048,
             meta_template: Optional[Dict] = None,
             end_str: Optional[str] = None,
             api_key: str = '',  # added: key expected by the lmdeploy server
             **kwargs):
    super().__init__(path='',
                     max_seq_len=max_seq_len,
                     meta_template=meta_template)
    from lmdeploy.serve.openai.api_client import APIClient
    # Forward the key so the client authenticates against the server
    self.chatbot = APIClient(api_addr, api_key=api_key)
```
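The unpatched `__init__` may also accept `**kwargs`, as the patched version above does. In that case, passing `api_key` in the config raises no error and the key is simply dropped. One way to check whether your installed copy has been patched is to look for an explicit `api_key` parameter with `inspect.signature`. `has_explicit_param` is a hypothetical helper.

```python
import inspect
from typing import Callable


def has_explicit_param(func: Callable, name: str) -> bool:
    """True if `name` is a named parameter of func, not merely absorbed by *args/**kwargs."""
    param = inspect.signature(func).parameters.get(name)
    return param is not None and param.kind not in (
        inspect.Parameter.VAR_POSITIONAL,
        inspect.Parameter.VAR_KEYWORD,
    )
```

For the installed package, you can run `from opencompass.models.turbomind_api import TurboMindAPIModel` and then `has_explicit_param(TurboMindAPIModel.__init__, "api_key")`. The check should return `True` once the patch is applied.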

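Running the evaluation

From the source directory, run:

```bash
python run.py config.py
```

Or, from the directory of the pip-installed package, run:

```bash
python utils/run.py config.py
```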

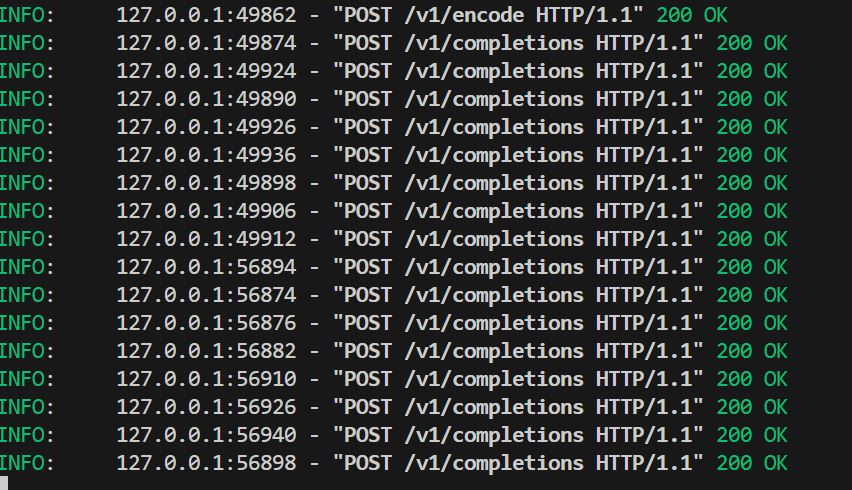

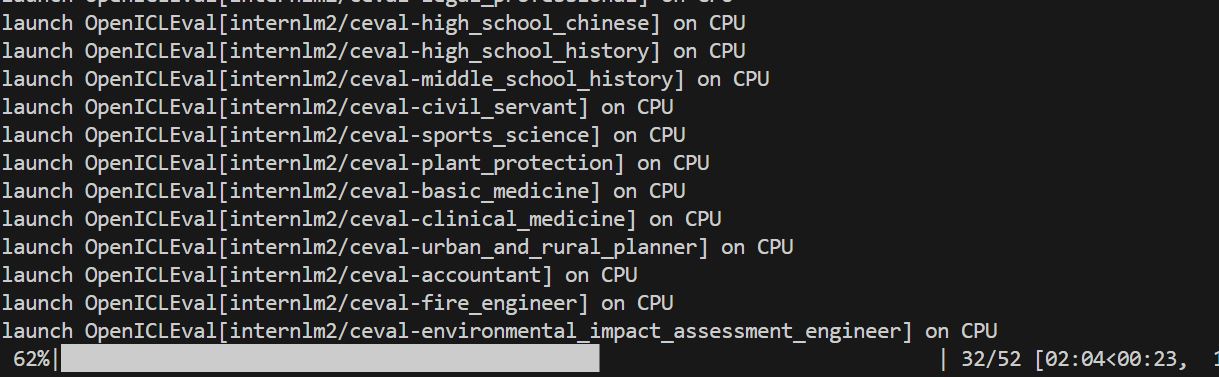

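This starts the evaluation; the screenshots show it running.

On the server side, you can see the incoming requests.

The results and the complete script are available at: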