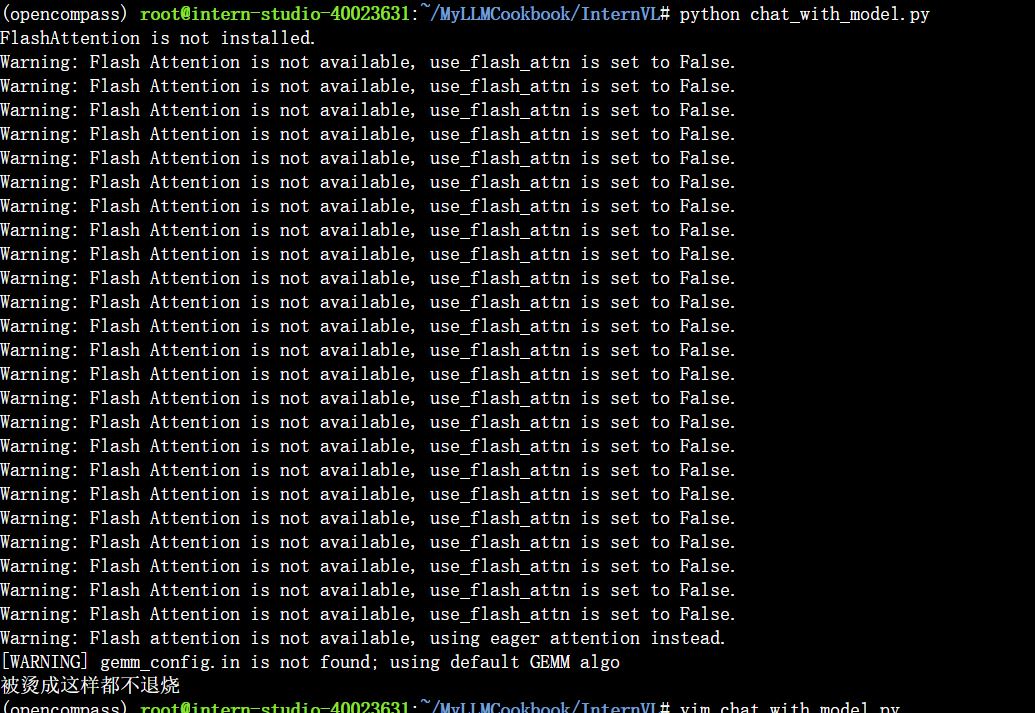

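Launching the original model

QLoRA fine-tuning configuration

Fine-tuning is done with QLoRA.

The configuration file has five parts. Only the Settings part needs to be edited: point it at your own model and data paths.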

path = '/root/model/InternVL2-2B'

# Data

data_root = '/root/MyLLMCookbook/InternVL/image/'

data_path = data_root + 'ex_cn.json'

image_folder = data_root

prompt_template = PROMPT_TEMPLATE.internlm2_chat

max_length = 6656

# Scheduler & Optimizer

batch_size = 4 # per_device

accumulative_counts = 4

dataloader_num_workers = 4

max_epochs = 6

optim_type = AdamW

# official 1024 -> 4e-5

lr = 2e-5

betas = (0.9, 0.999)

weight_decay = 0.05

max_norm = 1 # grad clip

warmup_ratio = 0.03
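As a quick sanity check on these values, the sketch below computes how many samples feed each optimizer step and how long warmup lasts. It assumes a single GPU (matching `NPROC_PER_NODE=1` in the launch command) and that warmup length is `warmup_ratio * max_epochs`, as in the stock XTuner configs; `num_gpus` is a name introduced here for illustration. The comment `# official 1024 -> 4e-5` appears to record the reference learning rate at a batch size of 1024, which is far larger than the effective batch size computed here.

```python
# Values copied from the Settings block above.
batch_size = 4            # per device
accumulative_counts = 4   # gradient accumulation steps
max_epochs = 6
warmup_ratio = 0.03

# Assumption: one GPU, matching NPROC_PER_NODE=1 in the launch command.
num_gpus = 1

# Number of samples that contribute to each optimizer step.
effective_batch_size = batch_size * accumulative_counts * num_gpus

# Assumption: warmup spans warmup_ratio * max_epochs, as in the stock XTuner configs.
warmup_epochs = warmup_ratio * max_epochs

print(f"effective batch size: {effective_batch_size}")  # 16
print(f"warmup: {warmup_epochs:.2f} epochs")            # 0.18
```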

Running the fine-tuning

This step takes a long time to run.
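Because the run is long, it can be worth confirming beforehand that every image referenced by the annotation file exists. The following is a minimal sketch, not part of XTuner. It assumes `ex_cn.json` is a JSON list of records whose optional `image` field holds a file name (or a list of file names) relative to `image_folder`; `find_missing_images` is a hypothetical helper name.

```python
import json
from pathlib import Path


def find_missing_images(data_path, image_folder):
    """Return image names referenced in the annotation file but absent on disk."""
    with open(data_path, encoding="utf-8") as f:
        records = json.load(f)

    missing = []
    for record in records:
        # Assumption: "image" is either one file name or a list of file names.
        images = record.get("image", [])
        if isinstance(images, str):
            images = [images]
        for name in images:
            if not (Path(image_folder) / name).is_file():
                missing.append(name)
    return missing
```

With the Settings values, the call would be `find_missing_images(data_path, image_folder)`; an empty list means every referenced image was found.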

NPROC_PER_NODE=1 xtuner train \
  qlora_finetune.py --work-dir /root/work_dir/internvl_ft_run_8_filter --deepspeed deepspeed_zero1

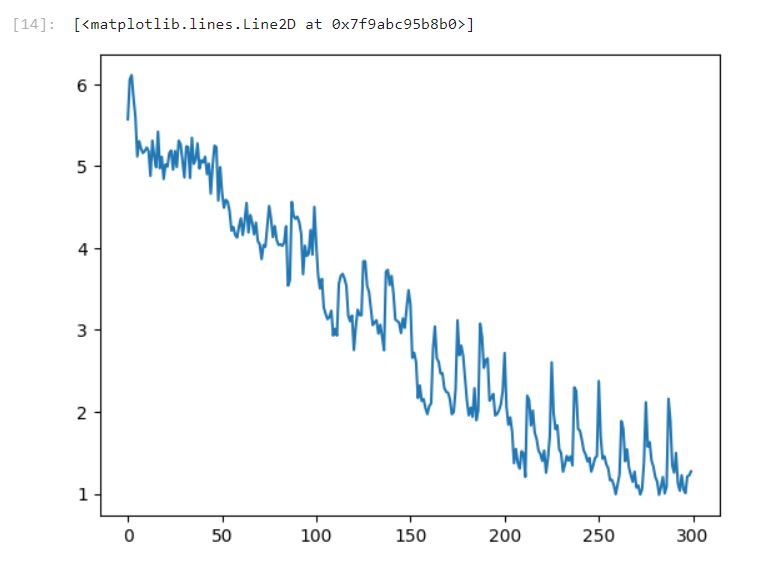

The loss visualization is shown below:

The loss oscillates somewhat, but it gradually converges.
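When a loss curve oscillates like this, smoothing it makes the underlying trend easier to judge. The sketch below applies a plain exponential moving average to a list of loss values. It is a generic technique, not something the training run does itself, and the sample numbers are hypothetical placeholders rather than values from this run.

```python
def ema_smooth(values, alpha=0.1):
    """Exponential moving average; a smaller alpha smooths more heavily."""
    smoothed = []
    running = None
    for v in values:
        # The first value seeds the average; later values are blended in.
        running = v if running is None else alpha * v + (1 - alpha) * running
        smoothed.append(running)
    return smoothed


# Hypothetical loss values, only to show the call; not taken from the run above.
example_losses = [2.0, 1.0, 1.8, 0.9, 1.5, 0.8]
print(ema_smooth(example_losses, alpha=0.5))
```

If the smoothed curve keeps falling while the raw curve bounces, the oscillation is most likely batch-to-batch noise rather than divergence.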

Merging the weights

The result is written into the original model directory, so the chat script does not need to be changed.

python3 convert_to_offical.py qlora_finetune.py ${work_dir} /root/model/InternVL2-2B/
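The command leaves `${work_dir}` undefined. If it is meant to point at a checkpoint inside the training work directory, a helper such as the one below could pick the most recent one. This is a sketch under two assumptions: that checkpoints are saved as entries named `iter_<N>.pth` (the naming MMEngine-based trainers commonly use), and that the conversion script accepts such a path. `latest_checkpoint` is a hypothetical name.

```python
import re
from pathlib import Path


def latest_checkpoint(work_dir):
    """Return the iter_<N>.pth entry with the largest N, or None if there is none."""
    best_path, best_iter = None, -1
    for entry in Path(work_dir).glob("iter_*.pth"):
        # Compare by iteration number, not by name, so iter_1000 beats iter_500.
        match = re.fullmatch(r"iter_(\d+)\.pth", entry.name)
        if match and int(match.group(1)) > best_iter:
            best_path, best_iter = entry, int(match.group(1))
    return best_path
```

For example, `latest_checkpoint("/root/work_dir/internvl_ft_run_8_filter")` would return the highest-numbered checkpoint under the work directory used for training, or `None` if training has not saved one yet.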