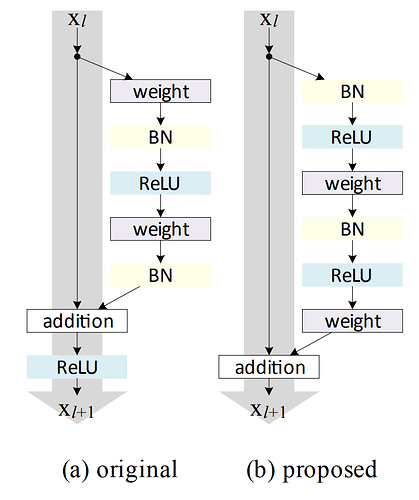

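ResNet (residual neural network) is designed for training very deep networks. Its key ingredient is the skip (shortcut) connection, which lets the input signal bypass one or more layers and be added directly to the output of a later layer. A residual block therefore computes `f(x) = g(x) + x`: if the layers in the branch learn `g(x) = 0`, the block reduces to the identity mapping. Because the identity is this easy to represent, very deep networks become easier to optimize.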
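As a minimal sketch of the idea, the module below adds the branch output to the input. It is a hypothetical `ResidualSketch` module, not the `Residual` class defined later. If the branch's last convolution is zero-initialized, then `g(x) = 0` and the block is exactly the identity:

```python
import torch
from torch import nn

class ResidualSketch(nn.Module):
    # Hypothetical minimal residual unit (not the Residual class below):
    # output = x + g(x), where g is a small stack of layers.
    def __init__(self, channels):
        super().__init__()
        self.g = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1),
        )

    def forward(self, x):
        return x + self.g(x)  # skip connection: input added to the branch output

block = ResidualSketch(channels=4)
# Zero the last conv of the branch so that g(x) == 0 for every input
nn.init.zeros_(block.g[2].weight)
nn.init.zeros_(block.g[2].bias)

x = torch.randn(2, 4, 8, 8)
y = block(x)
print(torch.equal(y, x))  # True: the block behaves as the identity mapping
```

With random initialization, `g(x)` is generally non-zero. The point is that the identity mapping lies within easy reach of the optimizer.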

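```python
import torch
from torch import nn
from torch.nn import functional as F
from d2l import torch as d2l

# Define the residual block
class Residual(nn.Module):
    """Residual block: two 3x3 convolutions plus a shortcut connection."""

    def __init__(self, input_channels, num_channels, use_1x1conv=False, strides=1):
        super().__init__()
        # First 3x3 conv: may downsample (strides=2) and change the channel count
        self.conv1 = nn.Conv2d(input_channels, num_channels,
                               kernel_size=3, padding=1, stride=strides)
        # Second 3x3 conv: keeps height, width and channels unchanged
        self.conv2 = nn.Conv2d(num_channels, num_channels,
                               kernel_size=3, padding=1)
        if use_1x1conv:
            # 1x1 conv on the shortcut so that X matches Y in channels and size
            self.conv3 = nn.Conv2d(input_channels, num_channels,
                                   kernel_size=1, stride=strides)
        else:
            self.conv3 = None
        # Each BN layer precedes a conv, so bn1 normalizes the block input
        self.bn1 = nn.BatchNorm2d(input_channels)
        self.bn2 = nn.BatchNorm2d(num_channels)

    def forward(self, X):
        # Main path uses BN -> ReLU -> conv ordering (as in pre-activation ResNet variants)
        Y = self.conv1(F.relu(self.bn1(X)))
        Y = self.conv2(F.relu(self.bn2(Y)))
        if self.conv3 is not None:
            X = self.conv3(X)
        # Skip connection: add the (possibly projected) input to the main path
        Y += X
        return F.relu(Y)
```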
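The 1x1 convolution on the shortcut is needed because `Y += X` requires both tensors to have the same shape. A minimal sketch, assuming a 64-channel 24x24 input and omitting BN/ReLU for brevity, shows that the strided 3x3 main path and the strided 1x1 shortcut produce matching shapes. Without the projection, the addition fails:

```python
import torch
from torch import nn

x = torch.rand(1, 64, 24, 24)  # assumed input: 64 channels, 24x24

# Main path (BN/ReLU omitted): strided 3x3 conv, then a size-preserving 3x3 conv
main_path = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=3, padding=1, stride=2),
    nn.Conv2d(128, 128, kernel_size=3, padding=1),
)
# Shortcut: 1x1 conv with the same stride and number of output channels
shortcut = nn.Conv2d(64, 128, kernel_size=1, stride=2)

y_main = main_path(x)
y_short = shortcut(x)
print(y_main.shape, y_short.shape)  # both torch.Size([1, 128, 12, 12])
y = y_main + y_short                # shapes match, so the residual sum works

# Without the projection, the raw input cannot be added to the main path
try:
    _ = y_main + x  # (1, 128, 12, 12) + (1, 64, 24, 24) is not broadcastable
    mismatch = False
except RuntimeError:
    mismatch = True
print(mismatch)  # True
```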

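```python
# Implement the ResNet model
def resnet_block(input_channels, num_channels, num_residuals, first_block=False):
    """Return a list of `num_residuals` Residual blocks forming one stage."""
    blk = []
    for i in range(num_residuals):
        if i == 0 and not first_block:
            # First block of a stage (except the first stage):
            # double the number of channels and halve the height and width
            blk.append(Residual(input_channels, num_channels,
                                use_1x1conv=True, strides=2))
        else:
            blk.append(Residual(num_channels, num_channels))
    return blk
```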
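To see exactly which blocks `resnet_block` builds for each stage, here is a hypothetical pure-Python helper, `stage_configs`, which is not part of d2l or PyTorch. It mirrors the same branching but records each block's arguments instead of constructing modules:

```python
def stage_configs(input_channels, num_channels, num_residuals, first_block=False):
    """Hypothetical helper mirroring resnet_block's branching.

    Returns (in_channels, out_channels, use_1x1conv, strides) per Residual block.
    """
    configs = []
    for i in range(num_residuals):
        if i == 0 and not first_block:
            # Downsampling block: 1x1 shortcut conv and stride 2
            configs.append((input_channels, num_channels, True, 2))
        else:
            # Shape-preserving block
            configs.append((num_channels, num_channels, False, 1))
    return configs

# The arguments used for the four stages b2..b5
for args in [(64, 64, 2, True), (64, 128, 2), (128, 256, 2), (256, 512, 2)]:
    print(stage_configs(*args))
```

Only the first stage (`first_block=True`) has no downsampling block. Every later stage begins with a stride-2 block that uses a 1x1 shortcut.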

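```python
# Stem: 7x7 conv (stride 2) + BN + ReLU + 3x3 max pooling (stride 2);
# 1 input channel because Fashion-MNIST images are grayscale
b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                   nn.BatchNorm2d(64), nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
# Four stages of two residual blocks each; b2 does not downsample
# because b1 has already reduced the height and width
b2 = nn.Sequential(*resnet_block(64, 64, 2, first_block=True))
b3 = nn.Sequential(*resnet_block(64, 128, 2))
b4 = nn.Sequential(*resnet_block(128, 256, 2))
b5 = nn.Sequential(*resnet_block(256, 512, 2))

# Global average pooling, flatten, then a fully connected layer for 10 classes
net = nn.Sequential(b1, b2, b3, b4, b5,
                    nn.AdaptiveAvgPool2d((1, 1)),
                    nn.Flatten(), nn.Linear(512, 10))
```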
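This configuration is ResNet-18. By the usual convention, only the convolutional and fully connected layers on the main path are counted, and the 1x1 shortcut convolutions, BN and pooling layers are excluded. A quick tally under that convention:

```python
# Number of Residual blocks in each of the four stages b2..b5
num_residuals_per_stage = [2, 2, 2, 2]
convs_per_residual = 2   # conv1 and conv2 in each Residual block

stem_convs = 1           # the 7x7 conv in b1
residual_convs = sum(num_residuals_per_stage) * convs_per_residual
fc_layers = 1            # the final nn.Linear(512, 10)

total = stem_convs + residual_convs + fc_layers
print(total)  # 18
```

Changing the number of blocks per stage in this list changes the depth in the same way. The architecture itself is not changed.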

Train the model:

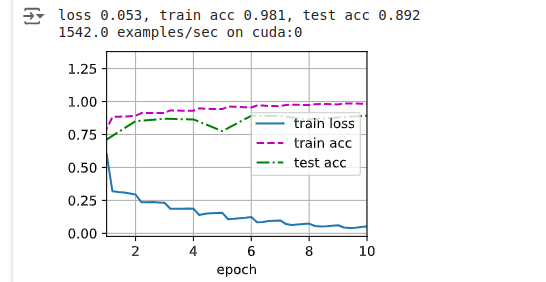

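```python
# Hyperparameters: learning rate, number of epochs, batch size
lr, num_epochs, batch_size = 0.05, 10, 256
# Load Fashion-MNIST, resizing the 28x28 images to 96x96
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=96)
# Train on a GPU if one is available, otherwise on the CPU
d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())
# Display the training plot (needed when running as a script rather than in Jupyter)
d2l.plt.show()
```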
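Because the data is loaded with `resize=96`, it helps to know how the height and width shrink through the network. The sketch below uses the standard output-size formula for convolution and max pooling, `floor((n + 2p - k) / s) + 1`, which matches PyTorch's default `ceil_mode=False`. The helpers `out_size` and `trace_resnet18` are hypothetical names chosen for illustration:

```python
def out_size(n, k, s, p):
    """Spatial output size of a conv / max-pool layer (floor mode)."""
    return (n + 2 * p - k) // s + 1

def trace_resnet18(n):
    """Height/width after each part of the network for an n x n input."""
    sizes = []
    n = out_size(n, k=7, s=2, p=3)   # b1: 7x7 conv, stride 2
    sizes.append(n)
    n = out_size(n, k=3, s=2, p=1)   # b1: 3x3 max pooling, stride 2
    sizes.append(n)
    sizes.append(n)                  # b2: no downsampling (first_block=True)
    for _ in range(3):               # b3, b4, b5: first conv1 has stride 2
        n = out_size(n, k=3, s=2, p=1)
        sizes.append(n)
    return sizes

print(trace_resnet18(96))   # [48, 24, 24, 12, 6, 3]
print(trace_resnet18(224))  # [112, 56, 56, 28, 14, 7]
```

With 96x96 inputs, `b5` outputs 512 feature maps of size 3x3. `nn.AdaptiveAvgPool2d((1, 1))` averages them down to 1x1 regardless of the input resolution, so `nn.Linear(512, 10)` works for both sizes.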