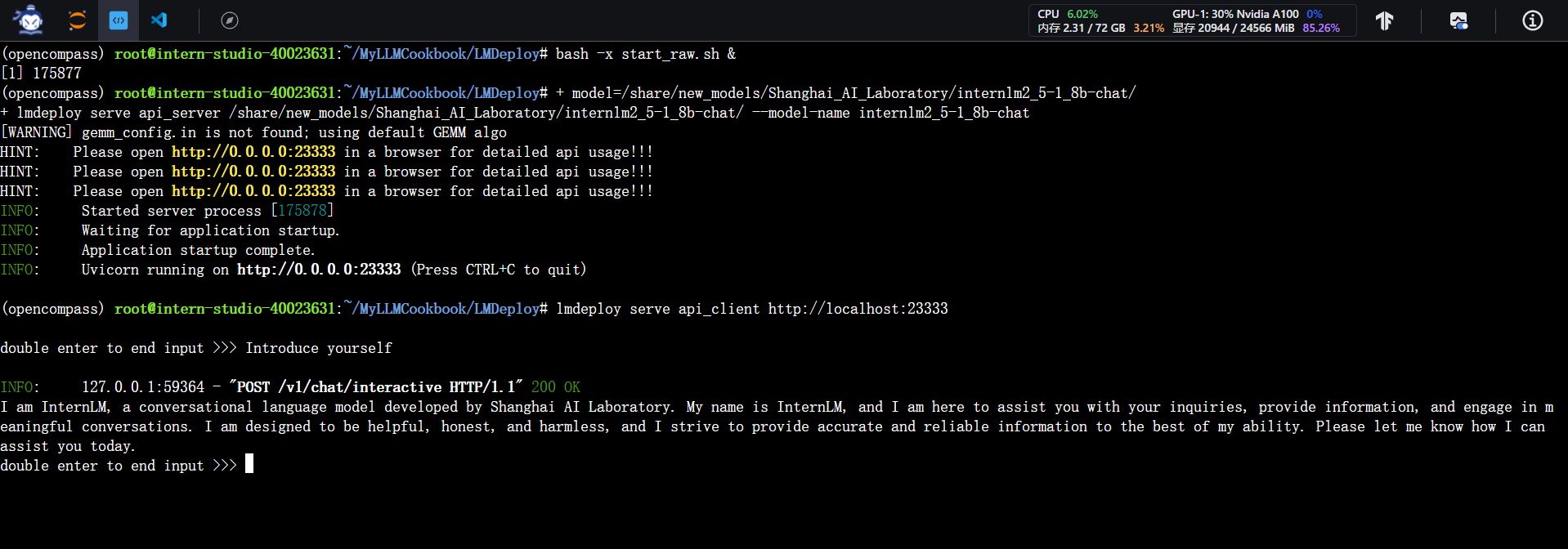

Start the unquantized model

Run quantization

    model=/share/new_models/Shanghai_AI_Laboratory/internlm2_5-1_8b-chat/
    lmdeploy lite auto_awq \
        ${model} \
        --calib-dataset ptb \
        --calib-samples 128 \
        --calib-seqlen 2048 \
        --w-bits 4 \
        --w-group-size 128 \
        --batch-size 1 \
        --search-scale False \
        --work-dir /root/models/internlm2_5-1_8b-chat-w4a16-4bitp

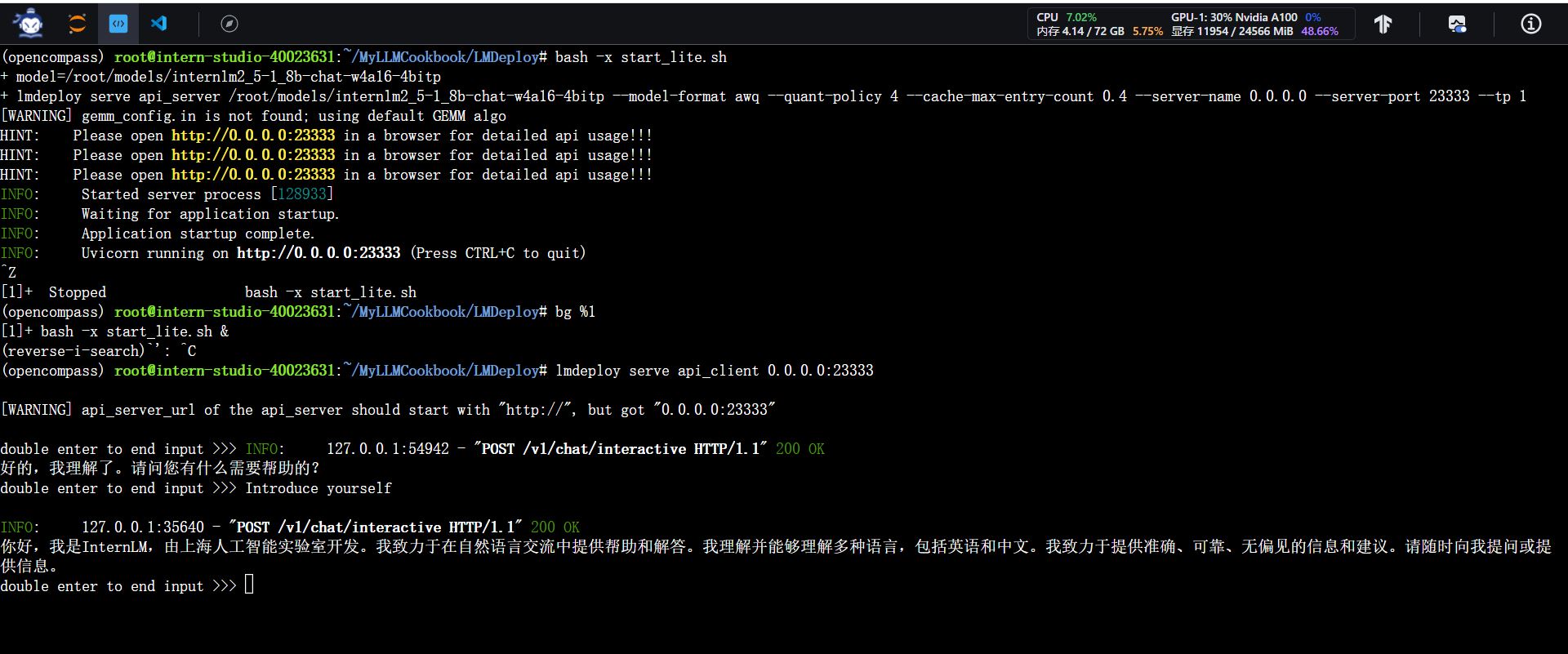

Start the quantized model

    model=/root/models/internlm2_5-1_8b-chat-w4a16-4bitp
    lmdeploy serve api_server \
        ${model} \
        --model-format awq \
        --quant-policy 4 \
        --cache-max-entry-count 0.4 \
        --server-name 0.0.0.0 \
        --server-port 23333 \
        --tp 1
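
Once the quantized server is up, it can be checked from a second terminal. `lmdeploy serve api_server` exposes OpenAI-style routes such as `/v1/models` and `/v1/chat/completions`. The sketch below assumes the client runs on the same machine as the server (hence `127.0.0.1`) and that the port matches `--server-port 23333`. The helper names `chat_payload`, `list_models` and `ask` are hypothetical, and the payload builder does no JSON escaping, so it only suits plain-text prompts.

```shell
# Assumption: the client runs on the same host as the server started above.
BASE_URL="http://127.0.0.1:23333"

# Build a minimal OpenAI-style chat request body.
# $1 = model name, $2 = prompt (plain text only: quotes/backslashes are not escaped).
chat_payload() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' "$1" "$2"
}

# List the model names the server reports.
list_models() {
  curl -s "${BASE_URL}/v1/models"
}

# Send one chat request to the running server.
ask() {
  curl -s "${BASE_URL}/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1" "$2")"
}
```

With the server running, `list_models` shows the name to pass as the first argument of `ask`, followed by the prompt as the second argument.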

(opencompass) root@intern-studio-40023631:~/MyLLMCookbook/LMDeploy# du -hs /root/models/internlm2_5-1_8b-chat-w4a16-4bitp/
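
To put the `du` figure in context, the quantized directory can be compared with the original one. This is a minimal sketch: the helper `size_ratio` is a hypothetical name, and the two paths are the ones used in the commands above. It reports on-disk usage, which includes tokenizer and config files, so the result is only a rough indicator of the weight compression.

```shell
# Print the second directory's disk usage as a percentage of the first's.
# du -sk reports sizes in KiB.
size_ratio() {
  orig_kib=$(du -sk "$1" | awk '{ print $1 }')
  quant_kib=$(du -sk "$2" | awk '{ print $1 }')
  awk -v o="$orig_kib" -v q="$quant_kib" 'BEGIN { printf "%.1f%%\n", 100 * q / o }'
}

orig=/share/new_models/Shanghai_AI_Laboratory/internlm2_5-1_8b-chat/
quant=/root/models/internlm2_5-1_8b-chat-w4a16-4bitp/

# Only run the comparison when both directories exist on this machine.
if [ -d "$orig" ] && [ -d "$quant" ]; then
  size_ratio "$orig" "$quant"
fi
```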